1. Development background of machine learning: artificial intelligence

Artificial intelligence (ArTIficial Intelligence, abbreviated as AI) is a new discipline that simulates human consciousness and thinking processes. Today, artificial intelligence has become a reality from the illusory scientific fantasy. Computer scientists have made major breakthroughs in the fields of machine learning and deep learning, the core of artificial intelligence. Machines are given powerful cognitive and predictive capabilities. Looking back at history, in 1997, IBM "Dark Blue" defeated chess champion Kasparov; in 2011, IBM Waston with machine learning capabilities participated in a variety show to win $ 1 million; in 2016, Aplphago using deep learning training Successfully defeated the human world champion. Various events show that machines can also think like humans, and even do better than humans.

At present, artificial intelligence has been widely used in finance, medical care, manufacturing and other industries. Global investment has soared from US $ 589 million in 2012 to more than US $ 5 billion in 2016. McKinsey predicts that the total value of the artificial intelligence application market will reach $ 127 billion by 2025. At the same time, McKinsey conducted an in-depth analysis of the investment in the artificial intelligence market in 2016 and found that nearly 60% of capital mergers and acquisitions are arranged around machine learning. Among them, software-based machine learning startups are more popular with investments than machine-based robotics companies. From 2013 to 2016, the compound annual growth rate of investment in this area reached about 80%. This shows that machine learning has become the main direction of the development of artificial intelligence technology.

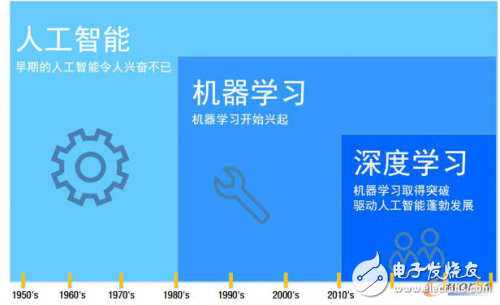

Second, the relationship between machine learning and artificial intelligence, deep learning

Before introducing machine learning, it is necessary to sort out the relationship between artificial intelligence, machine learning and deep learning. The most common divisions in the industry are:

Artificial intelligence uses a completely different working mode from traditional computer systems. It can read massive amounts of "big data" based on a common learning strategy and discover rules, connections, and insights from it. Therefore, artificial intelligence can automatically adjust based on new data. No need to reset the program.

Machine learning is the core technology of artificial intelligence research. Under the support of big data, various algorithms allow machines to perform deep statistical analysis of data for "self-study"; using machine learning, artificial intelligence systems have obtained inductive reasoning and decision-making Ability; and deep learning pushes this ability to a higher level.

Deep learning is a type of machine learning algorithm, which belongs to the artificial neural network system. Now the best performing machine learning in many application fields is designed based on neural networks that imitate the structure of the human brain. These computer systems can be completely autonomous. Learn, discover, and apply rules locally. Compared with other methods, it performs better in solving more complex problems. Deep learning is a way to help machines achieve "independent thinking".

In short, artificial intelligence is an important driving force for social development, and machine learning, especially deep learning technology is the core of the development of artificial intelligence, the relationship between the three of them is contained and contained. As shown in Figure 1.

Figure 1 The relationship between artificial intelligence, machine learning, and deep learning

3. Machine learning: an efficient way to achieve artificial intelligence

In a broad sense, machine learning is a method that can give machine learning the ability to perform functions that direct programming cannot. But in a practical sense, machine learning is a method that uses data to train a model and then uses the model to predict. Some foreign scholars have defined machine learning in much the same way. Some scholars believe that machine learning is the study of computer algorithms that can be automatically improved by experience; some scholars believe that machine learning refers to the use of data or past experience to optimize computer programs Performance standards. It can be seen that machine learning is the study of improving algorithms through experience or data. Through the algorithm, the machine learns laws from a large amount of historical data, obtains a certain model and uses this model to predict the future. During the learning process, the machine processes the data The more, the more accurate the prediction result.

Machine learning has a very important position in the research of artificial intelligence. It is the core of artificial intelligence and the fundamental way to make computers intelligent, and its applications are in various fields of artificial intelligence. From the 1950s, people began to study machine learning, from the initial method research based on neuron model and function approximation theory, to the generation of rule learning and decision tree learning based on symbolic calculus, and the subsequent recognition From the introduction of concepts such as induction, interpretation, and analogy in cognitive psychology, to the rise of the latest computational learning theory and statistical learning, machine learning has always played a leading role in the practical application of related disciplines. Many achievements have been made and many research directions have been differentiated, mainly including symbolic learning, connection learning and statistical learning.

(1) The development of machine learning

The development process of machine learning can be roughly divided into the following four stages:

1. Mid-50s to mid-1960s

In this period, the study is "no knowledge" learning, that is, "ignorance" learning; its research goals are various self-organizing systems and adaptive systems; its main research method is to continuously modify the control parameters of the system to improve the system Execution ability does not involve knowledge related to specific tasks. The theoretical basis guiding the research at this stage is the neural network model that has been studied as early as the 1940s. With the emergence and development of electronic computers, the realization of machine learning becomes possible. The research at this stage led to the birth of the new science of pattern recognition, and at the same time formed two important methods of machine learning, namely discriminant function method and evolutionary learning. Samuel's chess program is a typical example of using the discriminant function method. However, this perception-free learning system without knowledge has great limitations. Whether it is a neural model, evolutionary learning or discriminant function method, the learning results obtained are very limited, far from meeting people's expectations of machine learning systems. During this period, China has developed a digital recognition learning machine.

2. Mid-1960s to mid-1970s

The research goal of this stage is to simulate the concept learning process of human beings, and use the logical structure or graph structure as the internal description of the machine. Machines can use symbols to describe concepts (symbol concept acquisition) and make various assumptions about learning concepts. The representative work in this stage includes Winston's structural learning system and Hayes Roth's logic-based inductive learning system. Although this type of learning system has achieved great success, it can only learn a single concept, and it has not been put into practical use. In addition, the neural network learning machine turned into a low tide due to the theoretical flaws failing to achieve the expected results. Therefore, it disappoints those who have too much hope for the progress of machine learning. They called this period "the dark period".

3. Mid-70s to mid-80s

During this period, people expanded from learning a single concept to learning multiple concepts, exploring different learning strategies and various learning methods. The machine learning process is generally built on a large-scale knowledge base to achieve knowledge reinforcement learning. It is particularly encouraging that this stage has begun to integrate the learning system with various applications and has achieved great success, promoting the development of machine learning. After the emergence of the first expert learning system, the example reduction learning system became the mainstream of research, and automatic knowledge acquisition became the applied research goal of machine learning. In 1980, the first International Conference on Machine Learning was held at Carnegie Mellon University (CMU) in the United States, marking the rise of machine learning research in the world. After that, machine induction learning entered the application. In 1986, the international magazine "Machine Learning" (Machine Learning) was launched, ushering in a new era of vigorous development of machine learning. In the late 1970s, the Institute of Automation, Chinese Academy of Sciences conducted mass spectrometry analysis and pattern grammar inference research, which showed that China's machine learning research has been restored. After Simon came to China to spread the flame of machine learning in 1980, a new situation emerged in China's machine learning research.

4. The latest stage of machine learning began in 1986

On the one hand, due to the re-emergence of neural network research, the study of connection mechanism learning methods is in the ascendant. The research of machine learning has reached a new climax all over the world. The basic theory of machine learning and the research of integrated systems have been strengthened and developed. On the other hand, experimental research and applied research have received unprecedented attention, and machine learning has a stronger research method and environment. As a result, there have been hundreds of controversies such as symbol learning, neural network learning, evolutionary learning, and reinforcement learning based on behavioralism (acTIonism).

Figure 2 The development of machine learning

(2) Structural model of machine learning

The essence of machine learning is algorithms. Algorithms are a series of instructions for solving problems. The algorithms developed by programmers to guide computers in new tasks are the foundation of the advanced digital world we see today. Computer algorithms organize large amounts of data into information and services based on certain instructions and rules. Machine learning issues instructions to the computer, allowing the computer to learn from the data without requiring programmers to make new step-by-step instructions.

The basic process of machine learning is to provide training data for learning algorithms. Then, the learning algorithm generates a new set of rules based on the inference of the data. This is essentially generating a new algorithm, called a machine learning model. By using different training data, the same learning algorithm can generate different models. Inferring new instructions from data is a core advantage of machine learning. It also highlights the key role of data: the more data available to train the algorithm, the more the algorithm learns. In fact, many of the latest advances in AI are not due to radical innovations in learning algorithms, but now accumulate a large amount of available data.

Figure 3 Structural model of machine learning

(3) How machine learning works

1. Select data: first divide the original data into three groups: training data, verification data and test data;

2. Data modeling: Use training data to build models that use relevant features;

3. Verification model: Use verification data to input into the data model that has been constructed;

4. Test model: Use test data to check the performance of the verified model;

5. Use model: Use a fully trained model to make predictions on new data;

6. Tuning model: Use more data, different features or adjusted parameters to improve the performance of the algorithm.

Figure 4 How machine learning works

(4) The key cornerstones of machine learning development:

(1) Mass data: The energy source of artificial intelligence is a stable data flow. Machine learning can "train" itself with massive amounts of data before it can develop new rules to accomplish increasingly complex tasks. There are currently more than 3 billion people online, and about 17 billion connected devices or sensors, which generate a lot of data, and the reduced cost of data storage makes these data easy to use.

(2) Super computing: powerful computers and remote processing capabilities connected through the Internet make it possible to process massive amounts of data with machine learning technology. According to a certain media, the reason why ALPHGO can achieve a historic victory in the duel against Li Shishi This is inseparable from its hardware configuration of 1920 CPU and 280 GPU super computing system, which shows that computing power is crucial to machine learning.

(3) Excellent algorithms: In machine learning, learning algorithms (learning algorithms) create rules that allow computers to learn from data to infer new instructions (algorithm models), which is also the core advantage of machine learning. New machine learning technologies, especially layered neural networks, also known as "deep learning", have inspired new services and stimulated investment and research in other areas of artificial intelligence.

Figure 5 Key cornerstones of machine learning

(5) Classification of machine learning algorithms

Machine learning can usually be divided into three categories based on the different forms of learning:

1. Supervised Learning

The learning algorithm is provided with labeled data and the required output. For each input, the learner is provided with a response goal. Supervised learning is mainly used to quickly and efficiently teach the existing knowledge of AI, and is used to solve the problems of classification and regression. Common algorithms are:

(1) Decision Trees: Decision trees can be regarded as a tree-like prediction model. It classifies instances by arranging the instances from the root node to a leaf node. The leaf node is the classification to which the instance belongs. The core problem of decision tree is to choose split attributes and pruning of decision tree. A decision tree is a decision support tool that uses tree diagrams or models to represent decisions and their possible consequences, including the impact of random events, resource consumption, and usage. The decision tree used to analyze and judge the loan intention is shown in the figure. From a business perspective, it is often used for rule-based credit assessment and horse racing result prediction.

Figure 6 Decision tree

(2) Adaboost algorithm: This is an iterative algorithm whose core idea is to train different classifiers (weak classifiers) against the same training set, and then combine these weak classifiers to form a stronger final classifier (Strong classifier). The algorithm itself is realized by changing the data distribution. It determines the weight of each sample according to whether the classification of each sample in each training set is correct and the accuracy of the last overall classification. The new data with modified weights is sent to the lower classifier for training, and then the classifier obtained by each training is fused as the final decision classifier. The AdaBoost algorithm mainly solves: two types of problems, multi-class single-label problems, multi-class multi-label problems, large-class single-label problems and regression problems; advantages: the learning accuracy is significantly increased, and there will be no over-fitting problems, AdaBoost algorithm technology Commonly used in the field of face recognition and target tracking.

Figure 7 Adaboost

(3) Artificial neural network (Artificial Neural Network-ANN) algorithm: Artificial neural network is a nonlinear, adaptive information processing system composed of a large number of processing units interconnected. It is proposed on the basis of the research results of modern neuroscience, and attempts to process information by simulating the processing and memory of the neural network of the brain. Artificial neural network is a parallel distributed system. It uses a completely different mechanism from traditional artificial intelligence and information processing technology. It overcomes the shortcomings of traditional artificial intelligence based on logical symbols in processing intuitive and unstructured information. Features of self-organization and real-time learning.

Figure 8 Artificial Neural Network

(4) SVM (Support Vector Machine): The SVM method is the support vector machine algorithm, which was proposed by Vapnik et al in 1995 and has relatively excellent performance indicators. This method is a machine learning method based on statistical learning theory. SVM is a dichotomy algorithm. Suppose there is a set of points in N-dimensional space, including two types. SVM generates a (N-1) -dimensional hyperplane and divides these points into two groups. For example, you have some points on the paper, these points are linearly separated. The SVM will find a straight line, divide these points into two categories, and will stay away from these points as much as possible. In terms of scale, SVM (including properly adjusted) solves some very big problems: advertising, human gene splice site recognition, image-based gender detection, large-scale picture classification, suitable for news classification, handwriting recognition and other applications .

Figure 9 Support Vector Machine Algorithm

(5) Naive Bayesian (Naive Bayesian): Bayesian method is a pattern classification method in the case of known prior probability and class conditional probability, the classification result of the sample to be divided depends on the samples in various domains The whole. The naive Bayes classifier is based on the assumption of strong independence of applying Bayes' theorem to the relationship between features. Advantages: It is still effective when there is less data, and can handle multi-category problems. Disadvantages: more sensitive to the input data preparation method. Applicable data type: nominal data. Examples of real-life applications: secondary filtering of email spam, classification of article attributes, analysis of the content meaning and face recognition of text expressions, sentiment analysis, and consumer classification.

Figure 10 Naive Bayes algorithm

(6) K-Nearest Neighbors (KNN): This is a classification algorithm, and its core idea is that if a sample is in the feature space, most of the k most adjacent samples belong to a certain category, Then the sample also belongs to this category and has the characteristics of the sample in this category. This method determines the classification to which the samples to be classified belong based on the classification of the nearest sample or samples in determining the classification decision. The kNN method is only relevant to a very small number of adjacent samples when making category decisions. Since the kNN method mainly depends on the limited neighboring samples around, rather than the method of discriminating the class domain to determine the category, the kNN method is more preferable than other methods for the sample set to be divided or overlapped more than the class domain. As suitable.

Figure 11 K-nearest neighbor algorithm

(7) Logistic regression (LogisTIc Regression): This is a classification algorithm, mainly used for binary classification problems. Logistic regression is a very powerful statistical method that can build data with one or more explanatory variables into a binomial type model, estimate the probability by using the logistic function of the cumulative logistic distribution, measure the categorical dependent variable and one or The relationship between multiple independent variables. Logistic regression is a nonlinear regression model. Compared to linear regression, it has an additional sigmoid function (or Logistic function). Generally, the uses of regression in real life are as follows: credit evaluation, measuring marketing success, predicting the revenue of a product, whether an earthquake will occur on a specific day, road traffic flow analysis, and mail filtering.

Figure 12 Logistic regression algorithm

(8) Random Forest algorithm: Random Forest algorithm can be used to deal with regression, classification, clustering, and survival analysis. When it is used for classification or regression, its main idea is to resample by self-help method. Generate many tree regressors or classifiers. In machine learning, a random forest is a classifier that contains multiple decision trees, and its output category is determined by the mode of the category output by individual trees. It is often used for user churn analysis and risk assessment.

Figure 13 Random Forest Algorithm

(9) Linear regression (Linear Regression): This is a statistical analysis method that uses regression analysis in mathematical statistics to determine the interdependent quantitative relationship between two or more variables. It is widely used. Linear regression is the first type in regression analysis that has been rigorously studied and widely used in practical applications. This is because a model that depends linearly on its unknown parameters is easier to fit than a model that depends nonlinearly on its position parameters, and the estimated statistical properties are also easier to determine.

Figure 14 Linear regression algorithm

2. Unsupervised Learning

The data provided to the learning algorithm is unlabeled, and requires the algorithm to identify the patterns in the input data, mainly to build a model, use it to try to interpret the input data, and use it for the next input. In reality, many data sets often have a large number of unlabeled samples, but labeled samples are relatively few. If it is directly deprecated, it will largely result in low model accuracy. The solution to this situation is often to combine labeled samples and to change unlabeled samples into pseudo-labeled samples by estimation, so unsupervised learning is more difficult to master than supervised learning. Mainly used to solve clustering and dimensionality reduction problems, common algorithms are:

(1) Clustering algorithm: the task of grouping a group of objects so that objects in the same group are more similar to each other than objects in other groups. Common clustering algorithms include:

â‘ K-means algorithm: This is the representative of the typical prototype function clustering method based on the target. It is a certain distance from the data point to the prototype as the optimized target function. The method of seeking the extreme value of the function is used to obtain the adjustment rules of iterative operation. The advantage is that the algorithm is fast and simple enough, and if the preprocessing data and feature engineering are very effective, then the clustering algorithm will have extremely high flexibility. The disadvantage is that the algorithm needs to specify the number of clusters, and the choice of K value is usually not so easy to determine. In addition, if the real clusters in the training data are not spherical, then K-means clustering will result in some poorer clusters.

Figure 15 K-means algorithm

â‘¡Expectation Maximisation (EM): This is an iterative algorithm used for maximum likelihood estimation or maximum posterior probability estimation of probability parameter models containing latent variables. The main purpose of the EM algorithm is to provide a simple iterative algorithm to calculate the posterior density function. Its biggest advantage is simplicity and stability, but it is easy to fall into the local optimum.

Figure 16 EM algorithm

â‘¢Affinity Propagation clustering: AP clustering algorithm is a relatively new clustering algorithm. The clustering algorithm determines clusters based on graph distances between two sample points. The clusters adopting this clustering method have smaller and unequal sizes. Advantages: The algorithm does not need to indicate a clear number of clusters. Disadvantages: The main disadvantage of the AP clustering algorithm is that the training speed is relatively slow and requires a lot of memory, so it is difficult to expand to large data sets. In addition, the algorithm also assumes that the potential clusters are spherical.

â‘£ Hierarchical Clustering (Hierarchical Clustering): Hierarchical clustering is a series of clustering algorithms based on the following concepts: It is a hierarchical decomposition of the data set according to a certain method until a certain condition is met. According to the different classification principles, it can be divided into two methods: cohesion and splitting. Advantages: The main advantage of hierarchical clustering is that clusters no longer need to be assumed to be spherical. It can also be extended to large data sets. Disadvantages: a bit like K-means clustering, the algorithm needs to set the number of clusters.

Figure 17 Hierarchical clustering algorithm

⑤DBSCAN: This is a more representative density-based clustering algorithm. Different from partitioning and hierarchical clustering methods, it defines clusters as the largest set of points connected by density, can divide regions with sufficiently high density into clusters, and can find clusters of arbitrary shapes in the spatial database of noise. It forms a cluster of dense areas of sample points. Advantages: DBSCAN does not need to assume that the cluster is spherical, and its performance is scalable. In addition, it does not require every point to be assigned to a cluster, which reduces the abnormal data of the cluster. Disadvantages: The user must adjust the two "epsilon" and "min_sample" hyperparameters that define the cluster density. DBSCAN is very sensitive to these hyperparameters.

Figure 18 DBSCAN algorithm

(2) Dimensionality reduction algorithm: its main feature is to reduce the data from high dimensionality to low dimensionality, and retain the information of the data to the greatest extent. The representative algorithm is:

①The main representative is the principal component analysis algorithm (PCA algorithm): principal component analysis is also called principal component analysis, which aims to use the idea of ​​dimensionality reduction to convert multiple indicators into a few comprehensive indicators (ie, principal components), each of which is The components can reflect most of the original variable information, and the information contained does not overlap with each other. This method combines complex factors into several principal components while introducing multiple variables, which simplifies the problem and at the same time obtains more scientific and effective data information.

Figure 19 PCA algorithm

â‘¡ Locally linear embedding LLE LLE dimensionality reduction algorithm: a nonlinear dimensionality reduction algorithm, which can make the data after dimensionality reduction maintain the original manifold structure. The algorithm is an optimization method for the dimension of the non-linear signal feature vector. This dimension optimization is not just a simple reduction in number, but a signal in a high-dimensional space while keeping the original data properties unchanged. Mapped to low-dimensional space, that is, the second extraction of eigenvalues.

Figure 20 LLE dimensionality reduction algorithm

3. Reinforcement learning (Reinforcement Learning)

The algorithm interacts with the dynamic environment, takes the feedback of the environment as input, and selects the optimal action that can achieve its goal through learning. The mathematical principle behind reinforcement learning is slightly different from supervised / unsupervised learning. Supervised / unsupervised learning uses more statistics, while reinforcement learning combines discrete mathematics and stochastic processes. Common algorithms are:

①TD (λ) algorithm: TD (temporal differenee) learning is one of the most important learning techniques in reinforcement learning technology. TD learning is a combination of Monte Carlo ideas and dynamic programming ideas, that is, on the one hand, the TD algorithm can be learned directly from the agent experience without the need for a system model; on the other hand, the TD algorithm is similar to dynamic programming, using the estimated value function. Iterate.

Figure 21 TD (λ) algorithm

â‘¡Q_learning algorithm: Q_learning learning is a model-independent reinforcement learning algorithm, also known as off-policy TD (off-policy TD). Unlike the TD algorithm, the reward of the state_action pair and Q (s, a) are used as estimation functions in Q_learning iterations. Each agent needs to examine each behavior during each learning iteration to ensure the convergence of the learning process.

Figure 22 Q_learning algorithm

(6) An example of the machine learning process

The so-called machine learning process refers to observing a set of n sample data and predicting the nature of unknown data based on these data. Then, given the data set (so-called big data) and specific problems, the problem is generally solved. The steps can be summarized as follows:

1. Data abstraction

Abstract data sets and specific problems into mathematical language, expressed with appropriate mathematical symbols. This is naturally to facilitate the formulation and solution of problems, and it is also more intuitive.

2. Set performance metrics

Machine learning is an algorithm that generates a model. Generally speaking, models have errors. If the model is too good, consider some characteristics of the training sample as the general nature of all potential samples. This situation is called overfitting. Such a model will have a large error when facing a new sample. Professional expression The generalization performance of the model is reduced. In contrast, underfitting, the model does not learn the general properties of the sample, this situation is generally easier to solve, expand the data set or adjust the model.

3. Data preprocessing

The reason for data preprocessing is that the data sets provided are rarely used directly. For example: if the sample has too many attributes, there are generally two methods: dimensionality reduction and feature selection. Feature selection is relatively easy to understand, that is, to choose useful and relevant attributes, or to use another way of expression: to select the features in the sample that are useful and related to the problem.

4. Selected model

When the data set is perfect, the next step is to select the appropriate model based on the specific problem. One way is to consider whether there are labeled samples. If it is a labeled sample, you can consider supervised learning, otherwise it is unsupervised learning, and both will depend on whether semi-supervised learning comes in handy.

5. Training and optimization

With the model selected, how to train and optimize is also an important issue. If you want to evaluate the division effect of the training set and the verification set, the common methods are set aside, cross-validation, self-help method, and model adjustment. If the model calculation time is too long, you can consider pruning. Introduce regularization terms to suppress (compensation principle) If a single model is not effective, you can integrate multiple learners through a certain strategy to combine strengths and weaknesses (integrated learning)

6. Machine learning example analysis

In the field of machine learning, features are more important than models (learning algorithms). For example, if our features are well selected, we may be able to use simple rules to determine the final result, even without a model. For example, to determine whether a person is a man or a woman, suppose the computer does this task. First, various data are collected (features: name, height, hair length, birth place, smoking, etc .. Because according to statistics, we know that men are generally Women are taller, their hair is shorter than women, and they can smoke; so these features have a certain degree of discrimination, but there are always counterexamples. We may use the best algorithm and the accuracy rate may not reach 100%. Then perform feature extraction, Propose features that are meaningful to the target, delete irrelevant (hometown), and then pre-process, re-process the feature extraction results, the purpose is to enhance the representation ability of the features, to prevent the model (classifier) ​​from being too complicated and difficult to learn. Next It is the training data. Here we use supervised learning or unsupervised methods to fit the classifier model. The learner tries to fit the function between these data and the learning target by analyzing the rules of the data, so that the overall error defined on the training set As small as possible, so that the learned function can be used to predict the unknown data. As a result, the final results of the evaluation and improvement.

Figure 23 An example of the machine learning process

(7) Scope covered by machine learning

In terms of scope, machine learning is similar to pattern recognition, statistical learning, and data mining. At the same time, the combination of machine learning and processing technologies in other fields has formed interdisciplinary subjects such as computer vision, speech recognition, and natural language processing. Therefore, when it comes to data mining, it can be equated to machine learning. At the same time, what we usually say about machine learning applications should be general, not just limited to structured data, but also applications such as images and audio.

(1) Pattern recognition

Pattern recognition = machine learning. The main difference between the two is that the former is a concept developed from industry, and the latter is mainly derived from computer science. In the famous "Pattern Recognition And Machine Learning" book, Christopher M. Bishop said at the beginning: "Pattern recognition comes from industry, and machine learning comes from computer science. However, the activities in them can be regarded as two aspects of the same field. At the same time, in the past ten years, they Have made great progress. "

(2) Data mining

Data mining = machine learning + database. Data mining is just a way, not all data has value, data mining thinking is the key, plus a deep understanding of data, so that it is possible to derive patterns from the data to guide business improvement. Most of the algorithms in data mining are the optimization of machine learning algorithms in the database.

(3) Statistical learning

Statistical learning is approximately equal to machine learning. Statistical learning is a highly overlapping discipline with machine learning. Because most methods in machine learning come from statistics, it can even be argued that the development of statistics promotes the prosperity of machine learning. For example, the famous support vector machine algorithm is derived from the statistics department. The difference between the two is that statistical learners focus on the development and optimization of statistical models, partial mathematics, while machine learners focus more on problem solving and partial practice, so machine learning researchers will focus on learning algorithms in computers Improve the efficiency and accuracy of the implementation.

(4) Computer vision

Computer vision = image processing + machine learning. Image processing technology is used to process the image into an input suitable for entering the machine model, and machine learning is responsible for identifying relevant patterns from the image. There are many applications related to computer vision, such as Baidu image recognition, handwritten character recognition, license plate recognition and so on. This field will be a popular direction for future research. With the development of deep learning in the new field of machine learning, the effect of computer image recognition has been greatly promoted, so the future development prospect of the computer vision industry is inestimable.

(5) Voice recognition

Speech recognition = speech processing + machine learning. Speech recognition is a combination of audio processing technology and machine learning. Speech recognition technology is generally not used alone, and is generally combined with related technologies of natural language processing. Current related applications include Apple's voice assistant siri.

(6) Natural language processing

Natural language processing = text processing + machine learning. Natural language processing technology is mainly a field for machines to understand human language. In natural language processing technology, a large number of technologies related to compilation principles are used, such as lexical analysis, grammatical analysis, etc. In addition, at the level of understanding, technologies such as semantic understanding and machine learning are used. As the only symbol created by humans themselves, natural language processing has been the direction of continuous research in the machine learning world.

Figure 24 Scope of machine learning

(8) Main application scenarios of machine learning in industrial production

Machine learning, as the most effective implementation method of artificial intelligence, has been widely used in many scenarios such as industrial manufacturing. The following are five application scenarios of machine learning in industrial production.

1. Replacing visual inspection operations, realizing intelligent and unmanned manufacturing inspection

For example, the classification of engineering rock mass is mainly judged by experienced engineers through careful identification. The efficiency is relatively low, and different judgment deviations will occur due to different people. By adopting artificial intelligence, the experience of engineers is converted into deep learning algorithms. The accuracy of judgment is comparable to that of human judgment. After obtaining the corresponding weights, the APP is developed. In this way, after taking pictures with the tablet, the engineer can automatically obtain the project through the APP. The result of rock mass classification is highly efficient and accurate.

2. Significantly improve the operating performance of industrial robots, enhance the automation and unmanned manufacturing process

There are many operations in the industry that need to be sorted. If manual operations are used, the speed is slow and the cost is high, and it is necessary to provide a suitable working temperature environment.如果采用工业机器人的è¯ï¼Œå¯ä»¥å¤§å¹…å‡ä½Žæˆæœ¬ï¼Œæ高速度。例如图25所示的Bin Picking机器人。

图25 Bin Picking(零件分检)机器人

但是,一般需è¦åˆ†æ¡çš„零件并没有被整é½æ‘†æ”¾ï¼Œæœºå™¨äººè™½ç„¶æœ‰æ‘„åƒæœºçœ‹åˆ°é›¶ä»¶ï¼Œä½†å´ä¸çŸ¥é“如何把零件æˆåŠŸçš„æ¡èµ·æ¥ã€‚在这ç§æƒ…况下,利用机器å¦ä¹ ,先让工业机器人éšæœºçš„进行一次分æ¡åŠ¨ä½œï¼Œç„¶åŽå‘Šè¯‰å®ƒè¿™æ¬¡åŠ¨ä½œæ˜¯æˆåŠŸåˆ†æ¡åˆ°é›¶ä»¶è¿˜æ˜¯æŠ“空了,ç»è¿‡å¤šæ¬¡è®ç»ƒä¹‹åŽï¼Œæœºå™¨äººå°±ä¼šçŸ¥é“æŒ‰ç…§æ€Žæ ·çš„é¡ºåºæ¥åˆ†æ¡æ‰æœ‰æ›´é«˜çš„æˆåŠŸçŽ‡ï¼Œå¦‚图26所示。

图26 利用机器å¦ä¹ è¿›è¡Œæ•£å †æ‹¾å–

如图27所示,ç»è¿‡æœºå™¨å¦ä¹ åŽï¼Œæœºå™¨äººçŸ¥é“了分æ¡æ—¶å¤¹åœ†æŸ±çš„哪个ä½ç½®ä¼šæœ‰æ›´é«˜çš„æ¡èµ·æˆåŠŸçŽ‡ã€‚

图27 å¦ä¹ 次数越多准确性越高

如图28表明通过机器å¦ä¹ åŽï¼Œæœºå™¨äººçŸ¥é“æŒ‰ç…§æ€Žæ ·çš„é¡ºåºåˆ†æ¡ï¼ŒæˆåŠŸçŽ‡ä¼šæ›´é«˜ï¼Œå›¾ä¸æ•°å—是分æ¡çš„å…ˆåŽæ¬¡åºã€‚

图28 机器人确定分拣顺åº

如图29所示,ç»è¿‡8个å°æ—¶çš„å¦ä¹ åŽï¼Œæœºå™¨äººçš„分æ¡æˆåŠŸçŽ‡å¯ä»¥è¾¾åˆ°90%,和熟练工人的水平相当。

图29 分æ¡æˆåŠŸçŽ‡å¾—到大幅æå‡

3.工业机器人异常的æå‰æ£€çŸ¥ï¼Œä»Žè€Œæœ‰æ•ˆé¿å…机器故障带æ¥çš„æŸå¤±å’Œå½±å“

åœ¨åˆ¶é€ æµæ°´çº¿ä¸Šï¼Œæœ‰å¤§é‡çš„工业机器人。如果其ä¸ä¸€ä¸ªæœºå™¨äººå‡ºçŽ°äº†æ•…障,当人感知到这个故障时,å¯èƒ½å·²ç»é€ æˆå¤§é‡çš„ä¸åˆæ ¼å“,从而带æ¥ä¸å°çš„æŸå¤±ã€‚如果能在故障å‘生以å‰å°±æ£€çŸ¥çš„è¯ï¼Œå°±å¯ä»¥æœ‰æ•ˆå¾—åšå‡ºé¢„防,å‡å°‘æŸå¤±ã€‚如图30ä¸çš„工业机器人å‡é€Ÿæœºï¼Œå¦‚果给它们é…ä¸Šä¼ æ„Ÿå™¨ï¼Œå¹¶æå‰æå–它们æ£å¸¸ï¼ä¸æ£å¸¸å·¥ä½œæ—¶çš„波形,电æµç‰ä¿¡æ¯ï¼Œç”¨äºŽè®ç»ƒæœºå™¨å¦ä¹ 系统,那么è®ç»ƒå‡ºæ¥çš„模型就å¯ä»¥ç”¨æ¥æå‰é¢„è¦ï¼Œå®žé™…æ•°æ®è¡¨æ˜Žï¼Œæœºå™¨äººä¼šæ¯”人更早地预知到故障,从而é™ä½ŽæŸå¤±ã€‚

图30 工业机器人故障预测

如图9所示,ç»è¿‡æœºå™¨å¦ä¹ åŽï¼Œæ¨¡åž‹é€šè¿‡è§‚测到的波形,å¯ä»¥æ£€çŸ¥åˆ°äººå¾ˆéš¾æ„ŸçŸ¥åˆ°çš„细微å˜åŒ–,并在机器人彻底故障之å‰çš„数星期,就æ出有效的预è¦ã€‚图31是利用机器å¦ä¹ æ¥æå‰é¢„è¦ä¸»è½´çš„故障,一般情况下都是主轴出现问题åŽæ‰è¢«å‘现。

图31 主轴故障预测

4. PCB电路æ¿çš„辅助设计

任何一å—å°åˆ¶æ¿ï¼Œéƒ½å˜åœ¨ä¸Žå…¶ä»–结构件é…åˆè£…é…的问题,所以å°åˆ¶æ¿çš„外形和尺寸必须以产å“整机结构为ä¾æ®ï¼Œå¦å¤–还需è¦è€ƒè™‘到生产工艺,层数方é¢ä¹Ÿéœ€è¦æ ¹æ®ç”µè·¯æ€§èƒ½è¦æ±‚ã€æ¿åž‹å°ºå¯¸å’Œçº¿è·¯çš„密集程度而定。如果ä¸æ˜¯ç»éªŒä¸°å¯Œçš„技术人员,很难设计出åˆé€‚的多层æ¿ã€‚利用机器å¦ä¹ ,系统å¯ä»¥å°†æŠ€æœ¯äººå‘˜çš„ç»éªŒè½¬åŒ–为模型,从而æå‡PCB设计的效率与æˆåŠŸçŽ‡ï¼Œå¦‚图32所示。

图32 PCBæ¿è¾…助设计

5.快速高效地找出符åˆ3D模型的现实零件

例如工业上的3D模型设计完æˆåŽï¼Œéœ€è¦æ ¹æ®3D模型ä¸å‚数,寻找å¯å¯¹åº”的现实ä¸çš„é›¶ä»¶ï¼Œç”¨äºŽåˆ¶é€ å®žé™…çš„äº§å“。利用机器å¦ä¹ æ¥å®Œæˆè¿™ä¸ªä»»åŠ¡çš„è¯ï¼Œå¯ä»¥å¿«é€Ÿï¼Œé«˜åŒ¹é…率地找出符åˆ3D模型å‚数的那些现实零件。

图33æ˜¯æ ¹æ®3D模型设计的å‚数,机器å¦ä¹ 模型计算å„个现实零件与这些å‚数的类似度,从而ç›é€‰å‡ºåŒ¹é…的现实零件。没有使用机器å¦ä¹ 时,ç›é€‰çš„匹é…率大概是68%,也就是说,找出的现实零件ä¸æœ‰1ï¼3ä¸èƒ½æ»¡è¶³3D模型设计的å‚数,而使用机器å¦ä¹ åŽï¼ŒåŒ¹é…率高达96%。

图33 检索匹é…的零件

(ä¹ï¼‰æœºå™¨å¦ä¹ ä¸çš„日常生活场景

1.市民出行选乘公交预测

基于海é‡å…¬äº¤æ•°æ®è®°å½•ï¼Œå¸Œæœ›æŒ–掘市民在公共交通ä¸çš„行为模å¼ã€‚以市民出行公交线路选乘预测为方å‘,期望通过分æžå…¬äº¤çº¿è·¯çš„历å²å…¬äº¤å¡äº¤æ˜“æ•°æ®ï¼ŒæŒ–掘固定人群在公共交通ä¸çš„行为模å¼ï¼Œåˆ†æžæŽ¨æµ‹ä¹˜å®¢çš„å‡ºè¡Œä¹ æƒ¯å’Œå好,从而建立模型预测人们在未æ¥ä¸€å‘¨å†…将会æ乘哪些公交线路,为广大乘客æ供信æ¯å¯¹ç§°ã€å®‰å…¨èˆ’适的出行环境,用数æ®å¼•é¢†æœªæ¥åŸŽå¸‚智慧出行。

2.商å“图片分类

电商网站å«æœ‰æ•°ä»¥ç™¾ä¸‡è®¡çš„商å“图片,“æ‹ç…§è´â€â€œæ‰¾åŒæ¬¾â€ç‰åº”用必须对用户æ供的商å“图片进行分类。åŒæ—¶ï¼Œæå–商å“图åƒç‰¹å¾ï¼Œå¯ä»¥æ供给推èã€å¹¿å‘Šç‰ç³»ç»Ÿï¼Œæ高推èï¼å¹¿å‘Šçš„效果。希望通过对图åƒæ•°æ®è¿›è¡Œå¦ä¹ ,以达到对图åƒè¿›è¡Œåˆ†ç±»åˆ’分的目的。

3.基于文本内容的垃圾çŸä¿¡è¯†åˆ«

垃圾çŸä¿¡å·²æ—¥ç›Šæˆä¸ºå›°æ‰°è¿è¥å•†å’Œæ‰‹æœºç”¨æˆ·çš„难题,严é‡å½±å“到人们æ£å¸¸ç”Ÿæ´»ã€ä¾µå®³åˆ°è¿è¥å•†çš„社会形象以åŠå±å®³ç€ç¤¾ä¼šç¨³å®šã€‚而ä¸æ³•åˆ†åè¿ç”¨ç§‘技手段ä¸æ–更新垃圾çŸä¿¡å½¢å¼ä¸”ä¼ æ’途径éžå¸¸å¹¿æ³›ï¼Œä¼ 统的基于ç–ç•¥ã€å…³é”®è¯ç‰è¿‡æ»¤çš„效果有é™ï¼Œå¾ˆå¤šåžƒåœ¾çŸä¿¡â€œé€ƒè„±â€è¿‡æ»¤ï¼Œç»§ç»åˆ°è¾¾æ‰‹æœºç»ˆç«¯ã€‚希望基于çŸä¿¡æ–‡æœ¬å†…容,结åˆæœºå™¨å¦ä¹ 算法ã€å¤§æ•°æ®åˆ†æžæŒ–掘æ¥æ™ºèƒ½åœ°è¯†åˆ«åžƒåœ¾çŸä¿¡åŠå…¶å˜ç§ã€‚

4.国家电网客户用电异常行为分æž

éšç€ç”µåŠ›ç³»ç»Ÿå‡çº§ï¼Œæ™ºèƒ½ç”µåŠ›è®¾å¤‡çš„æ™®åŠï¼Œå›½å®¶ç”µç½‘å…¬å¸å¯ä»¥å®žæ—¶æ”¶é›†æµ·é‡çš„用户用电行为数æ®ã€ç”µåŠ›è®¾å¤‡ç›‘测数æ®ï¼Œå› æ¤ï¼Œå›½å®¶ç”µç½‘å…¬å¸å¸Œæœ›é€šè¿‡å¤§æ•°æ®åˆ†æžæŠ€æœ¯ï¼Œç§‘å¦çš„开展防窃电监测分æžï¼Œä»¥æ高å窃电工作效率,é™ä½Žçªƒç”µè¡Œä¸ºåˆ†æžçš„时间åŠæˆæœ¬ã€‚

5.自动驾驶场景ä¸çš„äº¤é€šæ ‡å¿—æ£€æµ‹

在自动驾驶场景ä¸ï¼Œäº¤é€šæ ‡å¿—的检测和识别对行车周围环境的ç†è§£èµ·ç€è‡³å…³é‡è¦çš„作用。例如通过检测识别é™é€Ÿæ ‡å¿—æ¥æŽ§åˆ¶å½“å‰è½¦è¾†çš„速度ç‰ï¼›å¦ä¸€æ–¹é¢ï¼Œå°†äº¤é€šæ ‡å¿—嵌入到高精度地图ä¸ï¼Œå¯¹å®šä½å¯¼èˆªä¹Ÿèµ·åˆ°å…³é”®çš„辅助作用。希望机é‡å®Œå…¨çœŸå®žåœºæ™¯ä¸‹çš„图片数æ®ç”¨äºŽè®ç»ƒå’Œæµ‹è¯•ï¼Œè®ç»ƒèƒ½å¤Ÿå®žé™…应用在自动驾驶ä¸çš„识别模型。

6.大数æ®ç²¾å‡†è¥é”€ä¸ç”¨æˆ·ç”»åƒæŒ–掘

在现代广告投放系统ä¸ï¼Œå¤šå±‚级æˆä½“系的用户画åƒæž„建算法是实现精准广告投放的基础技术之一。期望基于用户历å²ä¸€ä¸ªæœˆçš„查询è¯ä¸Žç”¨æˆ·çš„人å£å±žæ€§æ ‡ç¾ï¼ˆåŒ…括性别ã€å¹´é¾„ã€å¦åŽ†ï¼‰åšä¸ºè®ç»ƒæ•°æ®ï¼Œé€šè¿‡æœºå™¨å¦ä¹ ã€æ•°æ®æŒ–掘技术构建分类算法æ¥å¯¹æ–°å¢žç”¨æˆ·çš„人å£å±žæ€§è¿›è¡Œåˆ¤å®šã€‚

7.监控场景下的行人精细化识别

éšç€å¹³å®‰ä¸å›½ã€å¹³å®‰åŸŽå¸‚çš„æ出,视频监控被广泛应用于å„ç§é¢†åŸŸï¼Œè¿™ç»™ç»´æŠ¤ç¤¾ä¼šæ²»å®‰å¸¦æ¥äº†ä¾¿æ·ï¼›ä½†åŒæ—¶ä¹Ÿå¸¦æ¥äº†ä¸€ä¸ªé—®é¢˜ï¼Œå³æµ·é‡çš„视频监控æµä½¿å¾—å‘生çªå‘事故åŽï¼Œéœ€è¦è€—费大é‡çš„人力物力去æœç´¢æœ‰æ•ˆä¿¡æ¯ã€‚å¸Œæœ›åŸºäºŽç›‘æŽ§åœºæ™¯ä¸‹å¤šå¼ å¸¦æœ‰æ ‡æ³¨ä¿¡æ¯çš„行人图åƒï¼Œåœ¨å®šä½ï¼ˆå¤´éƒ¨ã€ä¸Šèº«ã€ä¸‹èº«ã€è„šã€å¸½åã€åŒ…ï¼‰çš„åŸºç¡€ä¸Šç ”ç©¶è¡Œäººç²¾ç»†åŒ–è¯†åˆ«ç®—æ³•ï¼Œè‡ªåŠ¨è¯†åˆ«å‡ºè¡Œäººå›¾åƒä¸è¡Œäººçš„属性特å¾ã€‚

8.需求预测与仓储规划方案

拥有海é‡çš„买家和å–家交易数æ®çš„情况下,利用数æ®æŒ–掘技术,我们能对未æ¥çš„商å“需求é‡è¿›è¡Œå‡†ç¡®åœ°é¢„测,从而帮助商家自动化很多供应链过程ä¸çš„决ç–。这些以大数æ®é©±åŠ¨çš„供应链能够帮助商家大幅é™ä½Žè¿è¥æˆæœ¬ï¼Œæ›´ç²¾ç¡®çš„需求预测,能够大大地优化è¿è¥æˆæœ¬ï¼Œé™ä½Žæ”¶è´§æ—¶æ•ˆï¼Œæå‡æ•´ä¸ªç¤¾ä¼šçš„供应链物æµæ•ˆçŽ‡ï¼Œæœæ™ºèƒ½åŒ–的供应链平å°æ–¹å‘æ›´åŠ è¿ˆè¿›ä¸€æ¥ã€‚高质é‡çš„商å“需求预测是供应链管ç†çš„åŸºç¡€å’Œæ ¸å¿ƒåŠŸèƒ½ã€‚

9.股价走势预测

éšç€ç»æµŽç¤¾ä¼šçš„å‘展,以åŠäººä»¬æŠ•èµ„æ„识的增强,人们越æ¥è¶Šå¤šçš„å‚与到股票市场的ç»æµŽæ´»åŠ¨ä¸ï¼Œè‚¡ç¥¨æŠ•èµ„也已ç»æˆä¸ºäººä»¬ç”Ÿæ´»çš„一个é‡è¦ç»„æˆéƒ¨åˆ†ã€‚然而在股票市场ä¸ï¼Œä¼—å¤šçš„æŒ‡æ ‡ã€ä¼—多的信æ¯ï¼Œå¾ˆéš¾æ‰¾å‡ºå¯¹è‚¡ä»·æ›´ä¸ºå…³é”®çš„å› ç´ ï¼›å…¶æ¬¡è‚¡å¸‚ç»“æž„æžä¸ºå¤æ‚,影å“å› ç´ å…·æœ‰å¤šæ ·æ€§ã€ç›¸å…³æ€§ã€‚这导致了很难找出股市内在的模å¼ã€‚希望在尽å¯èƒ½å…¨é¢çš„收集股市信æ¯çš„基础上,建立股价预测模。

10.地震预报

æ ¹æ®åŽ†å²å…¨çƒå¤§åœ°éœ‡çš„时空图,找出与ä¸å›½å¤§é™†å¤§åœ°éœ‡æœ‰å…³çš„14个相关区,对这些相关区é€ä¸€é‰´åˆ«ï¼Œé€‰å–较优的9个,å†æ ¹æ®è¿™9个相关区å‘生的大震æ¥é¢„测ä¸å›½å¤§é™†åœ¨æœªæ¥ä¸€å¹´å†…会ä¸ä¼šæœ‰å¤§éœ‡å‘生。

11.ç©¿è¡£æé…推è

ç©¿è¡£æé…是æœé¥°éž‹åŒ…导è´ä¸éžå¸¸é‡è¦çš„课题,基于æé…专家和达人生æˆçš„æé…组åˆæ•°æ®ï¼Œç™¾ä¸‡çº§åˆ«çš„商å“的文本和图åƒæ•°æ®ï¼Œä»¥åŠç”¨æˆ·çš„行为数æ®ã€‚期待能从以上行为ã€æ–‡æœ¬å’Œå›¾åƒæ•°æ®ä¸æŒ–掘穿衣æé…模型,为用户æ供个性化ã€ä¼˜è´¨çš„ã€ä¸“业的穿衣æé…方案,预测给定商å“çš„æé…商å“集åˆã€‚

12.ä¾æ®ç”¨æˆ·è½¨è¿¹çš„商户精准è¥é”€

éšç€ç”¨æˆ·è®¿é—®ç§»åŠ¨äº’è”ç½‘çš„ä¸Žæ—¥ä¿±å¢žï¼Œå¦‚ä½•æ ¹æ®ç”¨æˆ·çš„ç”»åƒå¯¹ç”¨æˆ·è¿›è¡Œç²¾å‡†è¥é”€æˆä¸ºäº†å¾ˆå¤šäº’è”网和éžäº’è”网ä¼ä¸šçš„æ–°å‘展方å‘ã€‚å¸Œæœ›æ ¹æ®å•†æˆ·ä½ç½®åŠåˆ†ç±»æ•°æ®ã€ç”¨æˆ·æ ‡ç¾ç”»åƒæ•°æ®æå–ç”¨æˆ·æ ‡ç¾å’Œå•†æˆ·åˆ†ç±»çš„å…³è”关系,然åŽæ ¹æ®ç”¨æˆ·åœ¨æŸä¸€æ®µæ—¶é—´å†…çš„ä½ç½®æ•°æ®ï¼Œåˆ¤æ–用户进入该商户地ä½èŒƒå›´300米内,则对用户推é€ç¬¦åˆè¯¥ç”¨æˆ·ç”»åƒçš„商户ä½ç½®å’Œå…¶ä»–ä¼˜æƒ ä¿¡æ¯ã€‚

13.气象关è”分æž

在社会ç»æµŽç”Ÿæ´»ä¸ï¼Œä¸å°‘行业,如农业ã€äº¤é€šä¸šã€å»ºç‘业ã€æ—…游业ã€é”€å”®ä¸šã€ä¿é™©ä¸šç‰ï¼Œæ— 一例外与天气的å˜åŒ–æ¯æ¯ç›¸å…³ã€‚为了更深入地挖掘气象资æºçš„价值,希望基于共计60å¹´çš„ä¸å›½åœ°é¢åŽ†å²æ°”象数æ®ï¼ŒæŽ¨åŠ¨æ°”象数æ®ä¸Žå…¶ä»–å„è¡Œå„业数æ®çš„有效结åˆï¼Œå¯»æ±‚气象è¦ç´ 之间ã€ä»¥åŠæ°”象与其它事物之间的相互关系,让气象数æ®å‘挥更多元化的价值。

14.交通事故æˆå› 分æž

éšç€æ—¶ä»£å‘展,便æ·äº¤é€šå¯¹ç¤¾ä¼šäº§ç”Ÿå·¨å¤§è´¡çŒ®çš„åŒæ—¶ï¼Œå„类交通事故也严é‡åœ°å½±å“了人们生命财产安全和社会ç»æµŽå‘展。希望通过对事故类型ã€äº‹æ•…人员ã€äº‹æ•…车辆ã€äº‹æ•…天气ã€é©¾ç…§ä¿¡æ¯ã€é©¾é©¶äººå‘˜çŠ¯ç½ªè®°å½•æ•°æ®ä»¥åŠå…¶ä»–和交通事故有关的数æ®è¿›è¡Œæ·±åº¦æŒ–掘,形æˆäº¤é€šäº‹æ•…æˆå› 分æžæ–¹æ¡ˆã€‚

15.基于兴趣的实时新闻推è

éšç€è¿‘å¹´æ¥äº’è”网的飞速å‘展,个性化推èå·²æˆä¸ºå„大主æµç½‘站的一项必ä¸å¯å°‘æœåŠ¡ã€‚æä¾›å„类新闻的门户网站是互è”ç½‘ä¸Šçš„ä¼ ç»ŸæœåŠ¡ï¼Œä½†æ˜¯ä¸Žå½“今蓬勃å‘展的电å商务网站相比,新闻的个性化推èæœåŠ¡æ°´å¹³ä»å˜åœ¨è¾ƒå¤§å·®è·ã€‚å¸Œæœ›é€šè¿‡å¯¹å¸¦æœ‰æ—¶é—´æ ‡è®°çš„ç”¨æˆ·æµè§ˆè¡Œä¸ºå’Œæ–°é—»æ–‡æœ¬å†…容进行分æžï¼ŒæŒ–掘用户的新闻æµè§ˆæ¨¡å¼å’Œå˜åŒ–规律,设计åŠæ—¶å‡†ç¡®çš„推è系统预测用户未æ¥å¯èƒ½æ„Ÿå…´è¶£çš„新闻。

å››ã€æ·±åº¦å¦ä¹ :机器å¦ä¹ 的更高智能进阶

1.深度å¦ä¹ 的背景

2006å¹´ï¼ŒåŠ æ‹¿å¤§å¤šä¼¦å¤šå¤§å¦æ•™æŽˆã€æœºå™¨å¦ä¹ 领域的泰斗Geoffrey Hintonå’Œå¦ç”ŸSalakhutdinov在Science上å‘è¡¨æ–‡ç« ã€ŠReducing the Dimensionalitg of Data with Neural Neworksã€‹ï¼Œè¿™ç¯‡æ–‡ç« æœ‰ä¸¤ä¸ªä¸»è¦è§‚点:1)多éšå±‚神ç»ç½‘络有更厉害的å¦ä¹ 能力,å¯ä»¥è¡¨è¾¾æ›´å¤šç‰¹å¾æ¥æ述对象;2)è®ç»ƒæ·±åº¦ç¥žç»ç½‘络时,å¯é€šè¿‡é™ç»´ï¼ˆpreï¼training)æ¥å®žçŽ°ï¼Œè€æ•™æŽˆè®¾è®¡å‡ºæ¥çš„Autoencoderç½‘ç»œèƒ½å¤Ÿå¿«é€Ÿæ‰¾åˆ°å¥½çš„å…¨å±€æœ€ä¼˜ç‚¹ï¼Œé‡‡ç”¨æ— ç›‘ç£çš„方法先分开对æ¯å±‚网络进行è®ç»ƒï¼Œç„¶åŽå†æ¥å¾®è°ƒã€‚è¯¥æ–‡ç« çš„å‘表翻开了深度å¦ä¹ çš„æ–°ç¯‡ç« ã€‚2013å¹´4月,深度å¦ä¹ 技术被《麻çœç†å·¥å¦é™¢æŠ€æœ¯è¯„论》(MIT TechnologyReview)æ‚志列为2013å¹´å大çªç ´æ€§æŠ€æœ¯ï¼ˆBreakthrough Technology) 之首。与浅层å¦ä¹ 模型ä¾èµ–人工ç»éªŒä¸åŒï¼Œæ·±å±‚å¦ä¹ 模型通过构建机器å¦ä¹ 模型和海é‡çš„è®ç»ƒæ•°æ®ï¼Œæ¥å¦ä¹ 更有用的特å¾ï¼Œä»Žè€Œæœ€ç»ˆæå‡åˆ†ç±»æˆ–预测的准确性。

图34 深度å¦ä¹ çš„å‘展历程

2.深度å¦ä¹ 的定义

深度å¦ä¹ 是机器å¦ä¹ ç ”ç©¶é¢†åŸŸçš„åˆ†æ”¯ï¼Œéš¶å±žäºŽç¥žç»ç½‘络体系。深度å¦ä¹ 通过建立ã€æ¨¡æ‹Ÿäººè„‘çš„ä¿¡æ¯å¤„ç†ç¥žç»ç»“æž„æ¥å®žçŽ°å¯¹å¤–部输入的数æ®è¿›è¡Œä»Žä½Žçº§åˆ°é«˜çº§çš„特å¾æå–,从而能够使机器ç†è§£å¦ä¹ æ•°æ®ï¼ŒèŽ·å¾—ä¿¡æ¯ï¼Œå› 具有多个éšè—层的神ç»ç½‘络åˆè¢«ç§°ä¸ºæ·±åº¦ç¥žç»ç½‘络。深度å¦ä¹ 将数æ®è¾“入系统åŽï¼Œé€šè¿‡å»ºæ¨¡åŠæ¨¡æ‹Ÿäººè„‘的神ç»ç½‘从而进行å¦ä¹ 的技术,åƒç”Ÿç‰©ç¥žç»å…ƒä¸€æ ·ï¼Œç¥žç»ç½‘络系统ä¸æœ‰ç³»åˆ—分层排列的模拟神ç»å…ƒï¼ˆä¿¡æ¯ä¼ 递的连接点),且ç»è¿‡æ¯ä¸ªç¥žç»å…ƒçš„å“应函数都会分é…一个相应的“æƒå€¼â€ï¼Œè¡¨ç¤ºå½¼æ¤ä¹‹é—´çš„连接强度。通过æ¯å±‚神ç»å…ƒç›¸äº’“连接â€ï¼Œè®¡ç®—机就å¯ä»¥ç”±è¾¾åˆ°æœ€ä½³æ–¹æ¡ˆæ—¶æ‰€æœ‰ç¥žç»å…ƒçš„åŠ æƒå’Œï¼Œä»Žè€Œå¯ä»¥å®žçŽ°è¿™ä¸€å†³ç–方案。

3.深度å¦ä¹ 的基础和实现

①深度å¦ä¹ çš„æ€æƒ³åŸºç¡€ä¸€è¯¯å·®é€†ä¼ æ’算法(BP算法)

BP神ç»ç½‘络(如图35) 是1986å¹´Rumelhartå’ŒMcClellandç‰äººæ出的,是一ç§æŒ‰è¯¯å·®é€†ä¼ æ’算法è®ç»ƒçš„多层å‰é¦ˆç¥žç»ç½‘络,它å˜å‚¨å¤§é‡æ˜ 射模å¼å…³ç³»ï¼Œæ— 需æç¤ºå…¶æ˜ å°„æ–¹ç¨‹ã€‚BPç®—æ³•çš„æ ¸å¿ƒæ€æƒ³æ˜¯é‡‡ç”¨æœ€é€Ÿä¸‹é™æ³•ï¼ˆæ¢¯åº¦ä¸‹é™æ³•ï¼‰ï¼Œé€šè¿‡åå‘ä¼ æ’调试网络的æƒå€¼å’Œé˜ˆå€¼ï¼Œä½¿å¾—其误差平方和最å°ã€‚

图35 BP神ç»ç½‘络

②图åƒå¤„ç†é¢†åŸŸçš„里程碑一å·ç§¯ç¥žç»ç½‘络(CNN)

20世纪60年代,Hubelå’ŒWieselåœ¨ç ”ç©¶çŒ«è„‘çš®å±‚ä¸ç”¨äºŽå±€éƒ¨æ•æ„Ÿå’Œæ–¹å‘选择的神ç»å…ƒæ—¶å‘现网络结构å¯ä»¥é™ä½Žå馈神ç»ç½‘络的å¤æ‚性,进而æ出了å·ç§¯ç¥žç»ç½‘络的概念。由于其é¿å…了对图åƒçš„å‰æœŸé¢„处ç†ï¼Œå¯ä»¥ç›´æŽ¥è¾“入原始图åƒï¼ŒCNNå·²ç»æˆä¸ºç¥žç»ç½‘ç»œçš„æ ‡å¿—æ€§ä»£è¡¨ä¹‹ä¸€ã€‚

图36 å·ç§¯ç¥žç»ç½‘络(CNN)

③深度神ç»ç½‘络的实现基础一玻尔兹曼机和å—é™çŽ»å°”兹曼机

玻尔兹曼机是Hintonå’ŒSejnowskiæ出的éšæœºé€’归神ç»ç½‘络,也å¯ä»¥çœ‹åšæ˜¯éšæœºçš„Hopfieldç½‘ç»œï¼Œå› æ ·æœ¬åˆ†å¸ƒéµå¾ªçŽ»å°”兹曼分布而命å为BM。

图37 玻尔兹曼机

4.深度å¦ä¹ çš„é‡å¤§æˆå°±

利用机器å¦ä¹ ,人工智能系统获得了归纳推ç†å’Œå†³ç–能力;而深度å¦ä¹ 更将这一能力推å‘了更高的层次。目å‰ï¼Œåœ¨æ·±åº¦å¦ä¹ ä¸ï¼Œå·ç§¯ç¥žç»ç½‘络(Convolutional Neural Network,简称CNN)作为最有效的深层神ç»ç½‘络,现在已ç»è¢«è¶Šæ¥è¶Šå¤šåœ°åº”用到许多智能领域之ä¸ï¼Œå¹¶ä¸”它们越æ¥è¶Šåƒäººç±»äº†ï¼Œä¾‹å¦‚AlphaGoã€SIRIå’ŒFACEBOOKç‰éƒ½åº”用了å·ç§¯ç¥žç»ç½‘络。在ä¸å›½ç›®å‰éžå¸¸å…³æ³¨çš„æ™ºèƒ½åˆ¶é€ é¢†åŸŸä¸ï¼Œåˆ¶é€ 机器人是深度å¦ä¹ çš„ç»å…¸æ¡ˆä¾‹ï¼Œæ·±åº¦å¦ä¹ 的机器人能够自动适应外部环境的å˜åŒ–,é¢å¯¹æ–°åž‹ä»»åŠ¡æ—¶å¯ä»¥è‡ªåŠ¨é‡æ–°è°ƒæ•´ç®—法和技术,

5.深度å¦ä¹ çš„å‘展展望

深度å¦ä¹ å¿…å°†æˆä¸ºäººå·¥æ™ºèƒ½å‘å±•çš„æ ¸å¿ƒé©±åŠ¨åŠ›ã€‚è™½ç„¶æ·±åº¦å¦ä¹ 在实际应用ä¸å–得了许多æˆå°±ï¼Œä½†æ˜¯ä»æœ‰å±€é™æ€§ï¼šç†è®ºç ”究缺ä¹ã€æ— 监ç£å¦ä¹ 能力弱ã€ç¼ºå°‘逻辑推ç†å’Œè®°å¿†èƒ½åŠ›ç‰ã€‚深度å¦ä¹ çš„ç ”ç©¶å¤šæ˜¯åŸºäºŽå®žéªŒè®ç»ƒè¿›è¡Œçš„,但是对其内部原ç†ï¼Œå¦ä¹ æœ¬è´¨ç ”ç©¶å¾ˆå°‘ã€‚çŽ°åœ¨çš„ç ”ç©¶å¤šæ˜¯åœ¨ç½‘ç»œæž¶æž„ã€å‚数选择ç‰æ–¹é¢ï¼Œè€Œä¸”深度å¦ä¹ 的还有进一æ¥æå‡ç©ºé—´ï¼Œä¹Ÿéœ€è¦æ›´åŠ 完备深入的ç†è®ºæ”¯æ’‘å…¶å‘展。

ç›®å‰ä¸»æµåº”用还是以监ç£å¦ä¹ 为主的,但在实际生活ä¸ï¼Œæ— æ ‡ç¾æœªçŸ¥çš„æ•°æ®å 主体,所以更应该应用å¯ä»¥å‘çŽ°äº‹ç‰©å†…åœ¨å…³ç³»çš„æ— ç›‘ç£å¦ä¹ ,未æ¥è¿˜æœ‰æ›´å¹¿é˜”çš„å‘展空间。深度å¦ä¹ 是人工智能å‘展的巨大推力,目å‰é˜¶æ®µä¸æ·±åº¦å¦ä¹ 更侧é‡äºŽå¤„ç†æ•°æ®ï¼Œåœ¨é¢å¯¹æ›´å¤æ‚的任务时,则需è¦æ›´å¤šè®°å¿†èƒ½åŠ›å’Œé€»è¾‘推ç†èƒ½åŠ›ã€‚

五:机器å¦ä¹ 的未æ¥ï¼šæŒ‘战与机é‡å¹¶å˜

机器å¦ä¹ 是人工智能应用的åˆä¸€é‡è¦ç ”究领域。当今,尽管在机器å¦ä¹ 领域已ç»å–å¾—é‡å¤§æŠ€æœ¯è¿›å±•ï¼Œä½†å°±ç›®å‰æœºå™¨å¦ä¹ å‘展现状而言,自主å¦ä¹ 能力还å分有é™ï¼Œè¿˜ä¸å…·å¤‡ç±»ä¼¼äººé‚£æ ·çš„å¦ä¹ 能力,åŒæ—¶æœºå™¨å¦ä¹ çš„å‘展也é¢ä¸´ç€å·¨å¤§çš„挑战,诸如泛化能力ã€é€Ÿåº¦ã€å¯ç†è§£æ€§ä»¥åŠæ•°æ®åˆ©ç”¨èƒ½åŠ›ç‰æŠ€æœ¯æ€§éš¾å…³å¿…须克æœã€‚但令人å¯å–œçš„是,在æŸäº›å¤æ‚的类人神ç»åˆ†æžç®—法的开å‘领域,计算机专家已ç»å–得了很大进展,人们已ç»å¯ä»¥å¼€å‘出许多自主性的算法和模型让机器展现出高效的å¦ä¹ 能力。对机器å¦ä¹ 的进一æ¥æ·±å…¥ç ”究,势必推动人工智能技术的深化应用与å‘展。

Quartz windows are usually cut and ground from quartz glass, and their silica content can reach more than 99.99%. The hardness is seven grades, and it has the characteristics of high temperature resistance, low thermal expansion coefficient, thermal shock resistance and good electrical insulation performance. The visible light transmittance of the quartz window is over 85%. Quartz glass can be divided into two categories, fused silica glass and synthetic silica glass, from the large production range. Heat resistance, light transmittance, electrical insulation, and chemical stability are all very good.

Due to the various characteristics of quartz glass, it is shown that it is an indispensable and excellent material in modern cutting-edge technology, atomic energy industry, automation system, as well as semiconductor, metallurgy, chemical industry, communications, lasers, optical instruments, laboratory instruments, medical equipment and national defense industries. One, the application is very wide.

Coating Window,Protective Windows,Wedge Window,Uv Fused Silica Window

Bohr Optics Co.,Ltd , https://www.bohr-optics.com