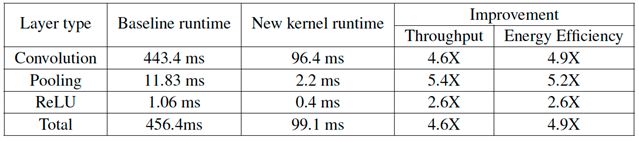

Neural networks are becoming increasingly popular on "always-on" IoT edge devices that need to analyze data locally, mainly because local inference reduces the latency and power consumption caused by data transmission. On IoT edge devices the natural target is the Arm Cortex-M family of processor cores, and CMSIS-NN is the library designed to improve neural network performance and reduce memory consumption on those cores. Compared with a baseline implementation, neural network inference based on the CMSIS-NN kernels achieves a 4.6X improvement in runtime/throughput and a 4.9X improvement in energy efficiency.

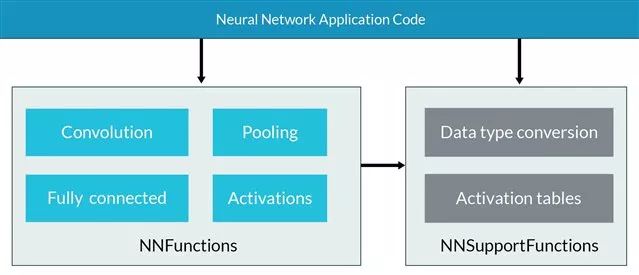

The CMSIS-NN library consists of two parts: NNFunctions and NNSupportFunctions. NNFunctions contains the functions that implement the common neural network layer types, such as convolution, depthwise separable convolution, fully connected (i.e. inner-product), pooling and activation. Application code calls these functions to implement neural network inference. The kernel API is deliberately kept simple so that it can be easily retargeted to any machine learning framework. NNSupportFunctions contains utility functions, such as the data conversion routines and activation tables used by NNFunctions. Application code can also use these utilities to build more complex NN modules, such as long short-term memory (LSTM) or gated recurrent unit (GRU) layers.
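To make the layer functions more concrete, here is a minimal Python sketch of what an 8-bit fixed-point fully connected layer followed by ReLU computes. It is not the library's code: the names `fully_connected_q7`, `relu_q7` and `ssat8`, and the rounding and shift scheme (`bias_shift`, `out_shift`), are assumptions modelled on how such q7 kernels are commonly written.

```python
def ssat8(v: int) -> int:
    """Saturate an integer to the signed 8-bit range [-128, 127]."""
    return max(-128, min(127, v))

def fully_connected_q7(x, weights, bias, bias_shift, out_shift):
    """Inner product per output neuron with 8-bit inputs and a wide accumulator.

    Assumed scheme: the bias is aligned to the accumulator with a left shift,
    a rounding term is added, and the result is shifted back and saturated.
    """
    rounding = (1 << (out_shift - 1)) if out_shift > 0 else 0
    out = []
    for row, b in zip(weights, bias):
        acc = (b << bias_shift) + rounding
        acc += sum(w * v for w, v in zip(row, x))
        out.append(ssat8(acc >> out_shift))
    return out

def relu_q7(values):
    """ReLU on quantized values: negative entries become zero."""
    return [max(0, v) for v in values]

# Illustrative data only (not taken from the article's network).
x = [10, -20, 30, 40]
weights = [[1, 2, 3, 4], [-100] * 4, [127] * 4]
bias = [4, 0, 0]
y = fully_connected_q7(x, weights, bias, bias_shift=2, out_shift=3)
activated = relu_q7(y)
print(y, activated)
```

The second and third neurons deliberately overflow the 8-bit range, which shows why saturation matters when the accumulator is narrowed back to 8 bits.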

Some kernels (such as fully connected and convolution) come in several versions. Arm provides a basic version that can be used "as is" for any layer parameters. Other versions apply further optimization techniques, but they either transform the inputs or place restrictions on the layer parameters. Ideally, a simple script can analyze the network topology and automatically determine which function to use for each layer.
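A selection script of that kind could look roughly like the sketch below. The kernel names and channel constraints follow my understanding of the legacy q7 convolution API and should be treated as assumptions to verify against the CMSIS-NN version in use; the helper `choose_conv_kernel` and the layer list are hypothetical.

```python
def choose_conv_kernel(ch_in: int, ch_out: int) -> str:
    """Return the most specialized convolution kernel the layer qualifies for.

    Assumed constraints (check the library documentation):
      - RGB variant: exactly 3 input channels
      - fast variant: input channels a multiple of 4, output channels a multiple of 2
      - basic variant: no restrictions
    """
    if ch_in == 3:
        return "arm_convolve_HWC_q7_RGB"
    if ch_in % 4 == 0 and ch_out % 2 == 0:
        return "arm_convolve_HWC_q7_fast"
    return "arm_convolve_HWC_q7_basic"

# Hypothetical topology: (layer name, input channels, output channels).
layers = [("conv1", 3, 32), ("conv2", 32, 32), ("conv3", 32, 64), ("odd", 5, 7)]

plan = {name: choose_conv_kernel(ci, co) for name, ci, co in layers}
for name, kernel in plan.items():
    print(f"{name}: {kernel}")
```

Falling back to the basic version whenever a constraint is not met keeps the script safe: a layer never gets a kernel whose restrictions it violates.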

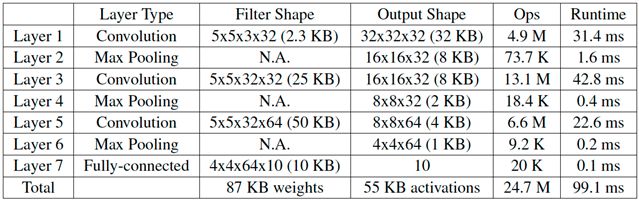

We tested the CMSIS-NN kernels on a convolutional neural network (CNN) trained on the CIFAR-10 dataset, which consists of 60,000 32x32 color images divided into 10 output classes. The network topology is based on the built-in example provided in Caffe, with three convolution layers and one fully connected layer. The following table shows the layer parameters and the detailed runtime results using the CMSIS-NN kernels. The test was performed on an STMicroelectronics NUCLEO-F746ZG Mbed development board with an Arm Cortex-M7 core running at 216 MHz.

The entire image classification takes approximately 99.1 milliseconds per image (equivalent to 10.1 images per second). The CPU running this network achieves a computational throughput of approximately 249 MOps per second. Before quantization, the network achieved an accuracy of 80.3% on the CIFAR-10 test set. The 8-bit quantized network running on the Arm Cortex-M7 core achieves an accuracy of 79.9%. The maximum memory footprint when using the CMSIS-NN kernels is ~133 KB, because convolution is implemented with partial im2col followed by matrix multiplication in order to save memory. Without partial im2col the memory usage would be ~332 KB, and the neural network would not fit on the board.
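The memory saving from partial im2col can be illustrated with a small pure-Python sketch. It is a simplified model, not the library's implementation: it uses a single input channel, stride 1 and no padding, and the chunk size of 2 patches as well as the function names are assumptions chosen for illustration.

```python
def conv_direct(img, filters, k):
    """Reference convolution: one list of per-filter outputs per output pixel."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h - k + 1):
        for x in range(w - k + 1):
            out.append([
                sum(f[i * k + j] * img[y + i][x + j]
                    for i in range(k) for j in range(k))
                for f in filters
            ])
    return out

def conv_partial_im2col(img, filters, k, chunk=2):
    """Expand only `chunk` patches at a time, then multiply by the filter matrix."""
    h, w = len(img), len(img[0])
    positions = [(y, x) for y in range(h - k + 1) for x in range(w - k + 1)]
    out, peak = [], 0
    for start in range(0, len(positions), chunk):
        # Small im2col buffer: one flattened k*k column per output pixel in the chunk.
        buf = [[img[y + i][x + j] for i in range(k) for j in range(k)]
               for (y, x) in positions[start:start + chunk]]
        peak = max(peak, sum(len(col) for col in buf))
        # Matrix multiplication of the filters with the buffered columns.
        for col in buf:
            out.append([sum(a * b for a, b in zip(f, col)) for f in filters])
    return out, peak

# Illustrative 6x6 image and two 3x3 filters (values are arbitrary).
k = 3
img = [[(3 * r + c) % 7 - 3 for c in range(6)] for r in range(6)]
filters = [[1, 0, -1, 2, 0, -2, 1, 0, -1], [1] * 9]

reference = conv_direct(img, filters, k)
result, peak_partial = conv_partial_im2col(img, filters, k)
full_buffer = (6 - k + 1) ** 2 * k * k  # elements a full im2col expansion would hold
print(result == reference, peak_partial, full_buffer)
```

Both paths produce the same outputs, but the partial version only ever holds two expanded patches, whereas a full im2col expansion must hold one patch per output pixel. The same effect, at the scale of the real network, is what separates the ~133 KB and ~332 KB figures above.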

To quantify the benefits of the CMSIS-NN kernels over existing solutions, we also implemented a baseline version using a one-dimensional convolution function (arm_conv from CMSIS-DSP) together with Caffe-like pooling and ReLU. The following table summarizes the comparison between the baseline functions and the CMSIS-NN kernels for the CNN application. The runtime/throughput of the CMSIS-NN kernels is 2.6 to 5.4 times better than that of the baseline functions, and the energy efficiency improvement is consistent with the throughput improvement.

Efficient NN kernels are the key to getting the most out of Arm Cortex-M CPUs. CMSIS-NN provides optimized functions that accelerate key NN layers such as convolution, pooling and activation. Just as importantly, CMSIS-NN helps reduce the memory footprint, which is critical for microcontrollers with limited memory.
