Ideally, embedded software has always been designed with safety and security in mind. However, connectivity has introduced intolerable security vulnerabilities into safety-critical applications such as medical devices, autonomous vehicles, and Internet of Things (IoT) devices.

The tight coupling of safety and security, combined with rising threat levels, requires developers to fully understand the difference between the two and to apply industry best practices from the outset so that both are designed into the product (Figure 1).

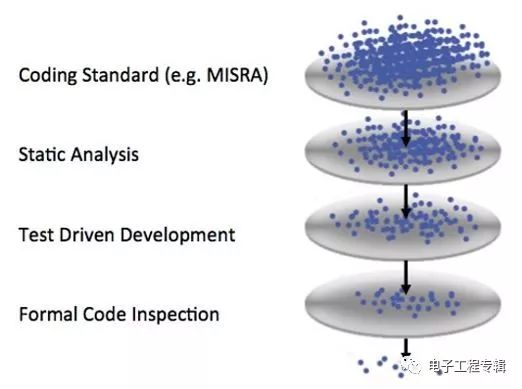

Figure 1: Filtering defects: ideal software and hardware design requires multiple layers of quality assurance, safety, and security throughout the design process. (Source: Barr Group)

The impact of poor design

With the rise of the Internet of Things, systems are now vulnerable to remote attacks. In one recent incident, Sony network security cameras were found to have a backdoor account. Such backdoors can be used by hackers to infect the system with botnet malware and launch further attacks. Sony therefore developed a firmware patch that users can download to close the backdoor. Many coding or design errors, however, are unrecoverable and can have catastrophic consequences.

To prove this, two security researchers remotely hacked a moving Jeep Cherokee, taking over dashboard functions, steering, transmission, and brakes. The hijacking was not malicious; it was carried out with the driver's permission to show how easily the vehicle could be attacked over the carrier's cellular network. Even so, the experiment led Chrysler to recall 1.4 million vehicles.

Of course, a system doesn't have to be connected to the Internet to be vulnerable and unsafe: poorly written embedded code and poor design decisions have already done such damage. For example, the Therac-25 radiotherapy machine for cancer treatment, launched in 1983, is a classic case study of errors to avoid in system design. A combination of software errors, a lack of hardware interlocks, and poor overall design decisions resulted in fatal radiation doses.

The factors behind the Therac-25's fatal accidents included:

• Immature and inadequate software development process ("untested software")

• Incomplete reliability modeling and failure mode analysis

• No (independent) review of critical software

• Improper reuse of older software

One of the main failure modes involved a 1-byte counter in a test routine that overflowed frequently. If the operator entered manual input at the moment the counter overflowed, the software-based interlock failed.
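The following Python sketch simulates this kind of failure with an emulated 8-bit counter. It is a simplified illustration, not the Therac-25 code: the names `increment_u8` and `skipped_checks` are hypothetical, and it assumes that a nonzero flag means "an interlock check is still required". Incrementing such a flag on every pass, instead of setting it to a constant, makes it wrap to zero on every 256th pass, and on those passes the check is silently skipped.

```python
def increment_u8(value):
    """Emulate a 1-byte unsigned increment: 255 + 1 wraps around to 0."""
    return (value + 1) & 0xFF


def skipped_checks(passes, fixed=False):
    """Count test-routine passes on which the interlock check is skipped.

    A nonzero flag means "a check is still required". The flawed version
    increments the flag on every pass; the fixed version sets it to 1.
    """
    flag = 0
    skipped = 0
    for _ in range(passes):
        flag = 1 if fixed else increment_u8(flag)
        if flag == 0:  # flag wrapped to zero: the check is bypassed
            skipped += 1
    return skipped
```

Over 1024 passes the flawed variant skips the check four times, while the variant that assigns a constant never does. A hardware interlock would have caught the hazard regardless of the software state.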

In June 1996, the European Space Agency's Ariane 5 rocket (Flight 501) veered off its planned flight path after launch and had to self-destruct. The cause was an overflow check that had been omitted for the sake of efficiency: when a variable holding the horizontal velocity overflowed, the software could not detect the error and respond appropriately.
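As a minimal sketch of the missing check, the Python functions below guard a narrowing conversion. The sketch assumes the overflow arises when a wide value is converted to a 16-bit signed integer; the function names and the two policies (reject or saturate) are illustrative choices, not the flight software's design.

```python
INT16_MIN, INT16_MAX = -32768, 32767


class ConversionOverflow(Exception):
    """Raised when a value does not fit the target integer type."""


def to_int16_checked(value: float) -> int:
    """Convert to a 16-bit signed integer, refusing out-of-range values."""
    truncated = int(value)  # truncates toward zero
    if not INT16_MIN <= truncated <= INT16_MAX:
        raise ConversionOverflow(f"{value} does not fit in 16 bits")
    return truncated


def to_int16_saturating(value: float) -> int:
    """Alternative policy: clamp to the nearest representable value."""
    return max(INT16_MIN, min(INT16_MAX, int(value)))
```

Which policy is appropriate depends on the system: rejecting forces the caller to handle the fault explicitly, while saturating keeps the system running with a bounded but inexact value.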

Despite these lessons, critical code is still often not reviewed for safety and security flaws. In fact, Barr Group's 2017 Embedded Systems Safety & Security Survey shows that, among engineers working on Internet-connected projects that could kill if hacked:

• 22% did not treat security as a design requirement

• 19% did not follow a coding standard

• 42% conducted code reviews only occasionally or not at all

• 48% did not encrypt their communications over the Internet

• More than 33% did not perform static analysis

Understanding the true meaning of safety and security is an important step towards remedying this situation.

Defining safety and security

The words safety and security are often used interchangeably. Some developers hold the misconception that if they write good code, the project will be both safe and secure. That is clearly not the case.

A "safe" system is one that does not harm the user or anyone else during normal operation. A "safety-critical" system is one that can cause injury or death if it malfunctions. The designer's goal is therefore to ensure that the system malfunctions or fails as rarely as possible.

"Security", on the other hand, concerns a product's ability to keep its assets available to authorized users while protecting them from unauthorized users (such as hackers). These assets include data in motion, code and intellectual property (IP), processors and system control centers, communication ports, memory, and stored static data.

It should now be clearer that a system can be secure without automatically being safe: a dangerous system can be just as secure as a safe and reliable one. An insecure system, however, is always unsafe, because even if it functions safely at first, it is vulnerable to unauthorized intrusion and may become unsafe at any time.

Designing for safety and security

When designing for safety, there are many factors to consider, as the Therac-25 case shows. However, designers can control only their own aspects of the design, and this article focuses on firmware.

A good example of a safety-critical application is the modern car. These vehicles may contain more than 100 million lines of code, yet they are in the hands of users (drivers) who are often untrained or distracted. To compensate for such users, more safety features and code have been added in the form of cameras and sensors, as well as vehicle-to-infrastructure (V2I) and vehicle-to-vehicle (V2V) communications. The amount of code is increasing, and it is growing exponentially!

Although this sheer volume of code makes such systems harder to write and debug, much of the debugging time can be saved by following some core principles, for example:

• Hardware/software distribution that impacts real-time performance, cost, scalability, security, reliability, and security

• Implement a fault-tolerant area.

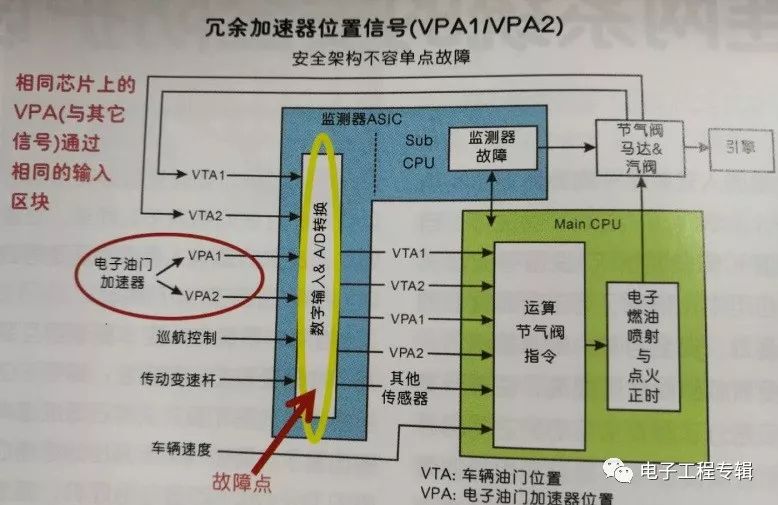

• Avoid single points of failure (Figure 2)

• Handling exceptions caused by code errors, the program itself, memory management, or spurious interrupts

• Include overflow checks (Therac-25 and Ariane rockets are omitted)

• Clean up pollution data from outside sources (use range check and CRC).

• Test at each level (unit test, integration test, system test, blurring, checksum verification, etc.)

Figure 2: Safety critical systems avoid single points of failure. (Source: Phil Koopman, Professor, Carnegie Mellon University, USA)
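The advice to sanitize external data with range checks and CRCs can be sketched as follows. The frame layout (a 2-byte big-endian speed reading followed by a CRC-32), the names `build_frame` and `parse_frame`, and the 0 to 300 km/h limits are all assumptions made for illustration.

```python
import struct
import zlib

SPEED_MIN_KPH, SPEED_MAX_KPH = 0, 300  # hypothetical valid sensor range


def build_frame(speed_kph: int) -> bytes:
    """Pack a reading as a 2-byte big-endian value followed by its CRC-32."""
    payload = struct.pack(">H", speed_kph)
    return payload + struct.pack(">I", zlib.crc32(payload))


def parse_frame(frame: bytes):
    """Return the speed, or None if the frame fails any validity check."""
    if len(frame) != 6:
        return None  # wrong length
    payload = frame[:2]
    (received_crc,) = struct.unpack(">I", frame[2:])
    if zlib.crc32(payload) != received_crc:
        return None  # corrupted in transit
    (speed,) = struct.unpack(">H", payload)
    if not SPEED_MIN_KPH <= speed <= SPEED_MAX_KPH:
        return None  # intact but implausible value
    return speed
```

The two checks are complementary: the CRC catches accidental corruption, while the range check rejects values that arrived intact but make no physical sense. A CRC does not protect against deliberate tampering, since an attacker can recompute it.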


For security, designers and developers need to be familiar with the intricacies of user and device authentication, public key infrastructure (PKI), and data encryption. In addition to making assets available to authorized users and protecting them from unauthorized access, security also means that the system does not behave unsafely or unpredictably in the face of an attack or failure.

Of course, attacks come in a variety of forms, including basic denial of service (DoS) and distributed DoS (DDoS). Although developers can't control what attacks the system is exposed to, they can control the system's response to the attack, and this response must be implemented system-wide. The weakest link in the system determines the overall security of the system, and it is wise to assume that the attacker will find the weak link.

One example of a weak link is remote firmware update (RFU): an attacker can compromise the system through the device's remote firmware update feature. A system is very vulnerable during an update, so it is wise to put defenses in place, for example by letting the user disable RFU or by accepting only update images that are digitally signed.

It may seem counterintuitive, but cryptography is rarely the weakest link. Instead, attackers look for other attack surfaces exposed by the implementation, protocol security, APIs, usage, and side channels.

How much effort, time, and resources to invest in these areas depends on the type of threat, and each threat calls for specific countermeasures. Developers can take some common steps to improve a product's resistance to attack:
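A minimal sketch of such a gate is shown below. To stay within the Python standard library it uses an HMAC-SHA256 tag as a stand-in for a digital signature; this is a deliberate substitution, and a real design would normally use an asymmetric scheme so that the device holds only a public key. The function names and the `rfu_enabled` switch are hypothetical.

```python
import hashlib
import hmac


def sign_image(image: bytes, key: bytes) -> bytes:
    """Build-server side: compute the authentication tag for an image."""
    return hmac.new(key, image, hashlib.sha256).digest()


def accept_update(image: bytes, tag: bytes, key: bytes,
                  rfu_enabled: bool = True) -> bool:
    """Device side: accept only if RFU is enabled and the tag matches."""
    if not rfu_enabled:
        return False  # the user has disabled remote updates
    expected = hmac.new(key, image, hashlib.sha256).digest()
    # compare_digest runs in constant time to avoid a timing side channel
    return hmac.compare_digest(expected, tag)
```

An image that has been altered after signing, or that arrives while RFU is disabled, is rejected before anything is written to flash.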

• Use a microcontroller without external memory

• Disable the JTAG interface

• Implement secure boot

• Generate a device-specific key for each unit using the master key

• Use object code obfuscation

• Implement Power On Self Test (POST) and Built-In Self Test (BIST)
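One item from the list above, generating a device-specific key from a master key, can be sketched with the standard library as shown here. Using HMAC-SHA256 as the derivation function, the `device-key-v1|` label, and the name `derive_device_key` are assumptions for illustration; a production design might prefer a standardized KDF such as HKDF and would keep the master key off the device.

```python
import hashlib
import hmac


def derive_device_key(master_key: bytes, device_id: bytes) -> bytes:
    """Derive a 32-byte per-device key as HMAC-SHA256(master_key, label + id)."""
    # The label separates these keys from other uses of the same master key.
    message = b"device-key-v1|" + device_id
    return hmac.new(master_key, message, hashlib.sha256).digest()
```

Because each unit receives a different key, extracting the key from one device does not directly expose the keys of the others.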

When it comes to obfuscation, there is a school of thought that advocates "security through obscurity." Relying on that idea can be fatal, because every secret creates a potential weak spot. Whether through social engineering, disgruntled employees, or techniques such as memory dumping and reverse engineering, secrets do not stay secret. Obscurity does have its place, of course, for example in keeping keys secret.

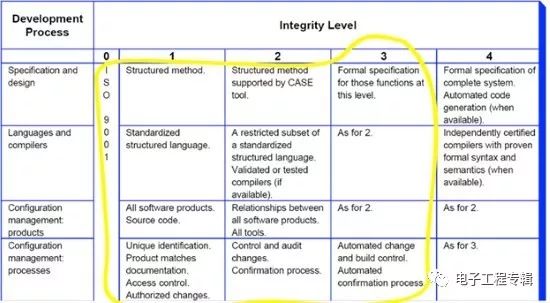

Ensuring safety and security

While many tools and techniques can help developers and designers achieve a high degree of safety and security, a few basic steps help ensure that the system is as good as is reasonably practicable. First, design according to proven coding rules and to functional safety, industry, and application-specific standards. These include MISRA and MISRA-C, ISO 26262, Automotive Open System Architecture (AUTOSAR), IEC 60335, and IEC 60730.

Using coding standards like MISRA not only helps to avoid errors, but also makes the code more readable, consistent, and portable (Figure 3).

Figure 3: Using coding standards like MISRA not only helps to avoid errors, it also makes the code more readable, consistent, and portable. (Source: Barr Group)

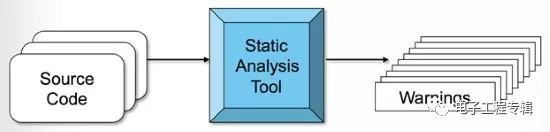

Second, use static analysis (Figure 4). This means analyzing the software without executing it. It is a form of symbolic execution, so it is essentially a simulation. Dynamic analysis, by contrast, finds defects while the actual code runs on the target platform.

Figure 4: A static analysis tool runs a "simulation" together with syntactic and logical analysis of the source files and outputs warnings instead of an object file. (Source: Barr Group)

While static analysis is not a panacea, it does add another layer of assurance because it is good at detecting potential errors such as the use of uninitialized variables, possible integer overflow or underflow, and the mixing of signed and unsigned data types. In addition, static analysis tools are constantly improving.
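To make the idea of analyzing code without running it concrete, here is a toy checker built on Python's standard `ast` module. It is only a sketch under strong assumptions: it handles straight-line function bodies, treats every unknown name as suspicious (so module-level globals would be false positives), and the name `find_uninitialized` is hypothetical. Commercial tools perform far deeper analysis.

```python
import ast
import builtins


def find_uninitialized(source: str) -> list:
    """Flag names that a function reads before assigning them.

    Returns a list of (line_number, name) tuples. The source is parsed
    into a syntax tree and inspected; it is never executed.
    """
    findings = []
    for func in ast.walk(ast.parse(source)):
        if not isinstance(func, ast.FunctionDef):
            continue
        known = {arg.arg for arg in func.args.args} | set(dir(builtins))
        for stmt in func.body:
            # Check this statement's reads before recording its writes.
            for node in ast.walk(stmt):
                if (isinstance(node, ast.Name)
                        and isinstance(node.ctx, ast.Load)
                        and node.id not in known):
                    findings.append((node.lineno, node.id))
            for node in ast.walk(stmt):
                if isinstance(node, ast.Name) and isinstance(node.ctx, ast.Store):
                    known.add(node.id)
    return findings
```

Given a function whose second line reads `offset` before a later line assigns it, the checker reports that line and name as a warning, and no part of the function ever runs.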

In general, static analysis means using specialized tools (such as PC-Lint or Coverity), but developers should also consider re-analysing their own code.

Third, conduct a code review. This will improve the correctness of the code while also contributing to maintainability and scalability. Code review also helps with recall/warranty repairs and product liability claims.

Fourth, conduct threat modeling. Start with attack trees. This requires the developer to think like an attacker and do the following:

• Identify the attack targets:

o Each target gets its own tree

• For each tree (target):

o Identify different attacks

o Determine the steps and options for each attack

It is worth noting that this analysis becomes far more valuable when it is performed from multiple perspectives.
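The attack-tree steps above can be captured in a small data structure. In this sketch, "or" nodes model alternative options, "and" nodes model steps that must all succeed, and leaves carry an estimated attacker cost. The `Node` class, the cost metric, and the example tree with its numbers are all invented for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """One node of an attack tree.

    kind is "leaf" (a concrete attacker action with an estimated cost),
    "or" (any one child suffices) or "and" (all children are required).
    """
    name: str
    kind: str = "leaf"
    cost: float = 0.0
    children: List["Node"] = field(default_factory=list)


def cheapest_attack(node: Node) -> float:
    """Estimated cost of the cheapest way to achieve this node's goal."""
    if node.kind == "leaf":
        return node.cost
    child_costs = [cheapest_attack(child) for child in node.children]
    return min(child_costs) if node.kind == "or" else sum(child_costs)


# Hypothetical tree for one target; names and costs are made up.
tree = Node("Install malicious firmware", "or", children=[
    Node("Abuse remote update", "and", children=[
        Node("Intercept update channel", cost=20),
        Node("Forge image signature", cost=1000),
    ]),
    Node("Reflash through open JTAG port", cost=50),
])
```

For this example `cheapest_attack(tree)` evaluates to 50, which points at the open JTAG port rather than the signed update path as the weakest link.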

Who has time to do it right?

Clearly, carrying out the four basic steps above will reduce errors and improve safety and security, but it takes time, so developers must budget accordingly. Although project sizes vary, it is important to budget as realistically as possible.

For example, add 15% to 50% to the design time to allow for code review. Some systems require a full code review; some do not. Static analysis tools can take from ten to several hundred hours to set up initially, but once they are part of the development process they add no extra time to product development, and the result is a better system.

Connectivity adds new concerns to embedded system design, and these demand special emphasis on safety and security. A detailed understanding of the two concepts, combined with the appropriate application of best practices early in the design cycle, can greatly improve the overall safety and security of the product. These best practices include adopting coding standards, using static analysis tools, conducting code reviews, and performing threat modeling.
